Introduction

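Generating detailed text descriptions that closely match the image or video content, and using them to build large numbers of high-quality video-text pairs, is essential for training models that follow instructions well. In fact, both image/video understanding and image/video generation depend on high-quality image/video-text data.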

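Compared with static images, videos carry richer dynamic visual content: actions, temporal order, changes, and the evolving relationships between entities. Analyzing such complex videos goes beyond what traditional image-understanding models can handle. Thanks to recent progress in large vision models, a growing number of multimodal LLMs now support video understanding.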

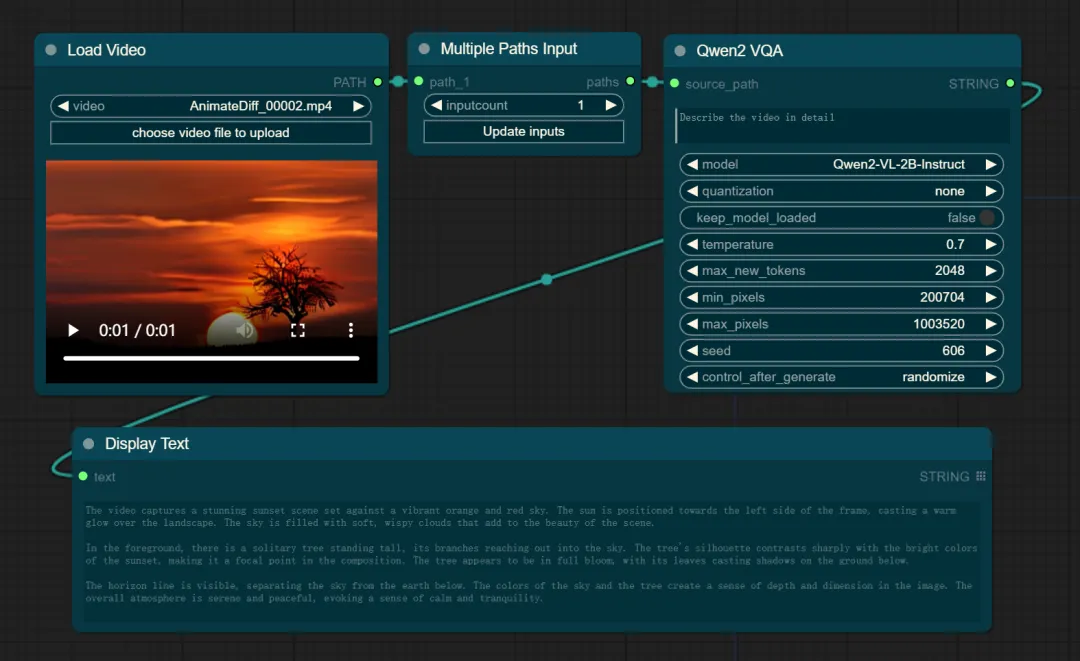

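In this post we combine ComfyUI with multimodal LLMs and, using ModelScope's free compute, build a WebUI that supports single-image, multi-image, and video understanding, so developers can quickly set up their own video/image captioning (tagging) tool.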

References:

Qwen2-VL:

https://github.com/IuvenisSapiens/ComfyUI_Qwen2-VL-Instruct

MiniCPM-V-2_6:

https://github.com/IuvenisSapiens/ComfyUI_MiniCPM-V-2_6-int4

Best Practices

Environment setup and installation:

Python 3.10 or later

PyTorch 2.0 or later

CUDA 12.1 or later (recommended)
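Before installing anything, it can help to confirm the three version requirements above. The sketch below is one possible check, not part of the original tutorial; the helper names are hypothetical, and it reads `torch.version.cuda`, which reports the CUDA version PyTorch was built against (it is `None` on CPU-only builds), not the driver version.

```python
import sys
from typing import Optional


def parse_version(v: str) -> tuple:
    """Turn '2.4.1+cu121' into (2, 4, 1), ignoring local suffixes and pre-release tags."""
    core = v.split("+")[0]
    parts = []
    for piece in core.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(found: Optional[str], minimum: str) -> bool:
    """True if `found` >= `minimum`; None (package missing, or no CUDA) counts as not met."""
    if found is None:
        return False
    return parse_version(found) >= parse_version(minimum)


def check_environment() -> dict:
    """Report the requirements listed above; torch is imported lazily so this runs without it."""
    py = ".".join(map(str, sys.version_info[:3]))
    report = {"python>=3.10": meets_minimum(py, "3.10")}
    try:
        import torch
        report["torch>=2.0"] = meets_minimum(torch.__version__, "2.0")
        report["cuda>=12.1"] = meets_minimum(torch.version.cuda, "12.1")
    except ImportError:
        report["torch>=2.0"] = False
        report["cuda>=12.1"] = False
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'OK' if ok else 'NOT MET'}")
```

Tuple comparison does the work here: `(3, 10, 12) >= (3, 10)` holds because a longer tuple with an equal prefix compares greater.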

This walkthrough runs on the free GPU compute provided by the ModelScope community.


Download and deploy ComfyUI

Clone the code and install the dependencies:

```python
# Extra Python packages: transformers from source, spandrel, and qwen-vl-utils
!pip install git+https://github.com/huggingface/transformers
!pip install spandrel
!pip install qwen-vl-utils
```

```python
#@title Environment Setup
OPTIONS = {}

UPDATE_COMFY_UI = True  #@param {type:"boolean"}
INSTALL_COMFYUI_MANAGER = True  #@param {type:"boolean"}
INSTALL_CUSTOM_NODES_DEPENDENCIES = True  #@param {type:"boolean"}
INSTALL_ComfyUI_Qwen2_VL_Instruct = True  #@param {type:"boolean"}
INSTALL_ComfyUI_MiniCPM_V_2_6 = True  #@param {type:"boolean"}

OPTIONS['UPDATE_COMFY_UI'] = UPDATE_COMFY_UI
OPTIONS['INSTALL_COMFYUI_MANAGER'] = INSTALL_COMFYUI_MANAGER
OPTIONS['INSTALL_CUSTOM_NODES_DEPENDENCIES'] = INSTALL_CUSTOM_NODES_DEPENDENCIES
OPTIONS['INSTALL_ComfyUI_Qwen2_VL_Instruct'] = INSTALL_ComfyUI_Qwen2_VL_Instruct
OPTIONS['INSTALL_ComfyUI_MiniCPM_V_2_6'] = INSTALL_ComfyUI_MiniCPM_V_2_6

# cd first so that WORKSPACE is /mnt/workspace/ComfyUI, the path used by later cells
%cd /mnt/workspace/
current_dir = !pwd
WORKSPACE = f"{current_dir[0]}/ComfyUI"

![ ! -d $WORKSPACE ] && echo -= Initial setup ComfyUI =- && git clone https://github.com/comfyanonymous/ComfyUI
%cd $WORKSPACE

if OPTIONS['UPDATE_COMFY_UI']:
    !echo "-= Updating ComfyUI =-"
    !git pull

# ComfyUI's own Python requirements
!pip install -r requirements.txt

if OPTIONS['INSTALL_COMFYUI_MANAGER']:
    %cd $WORKSPACE/custom_nodes
    ![ ! -d ComfyUI-Manager ] && echo -= Initial setup ComfyUI-Manager =- && git clone https://github.com/ltdrdata/ComfyUI-Manager
    %cd ComfyUI-Manager
    !git pull

# Absolute path, so this works whether or not ComfyUI-Manager was installed above
%cd $WORKSPACE/custom_nodes

# Clone each VQA custom node only if it is not already present (safe to re-run)
if OPTIONS['INSTALL_ComfyUI_Qwen2_VL_Instruct']:
    ![ ! -d ComfyUI_Qwen2-VL-Instruct ] && echo -= Initial setup ComfyUI_Qwen2-VL-Instruct =- && git clone https://github.com/IuvenisSapiens/ComfyUI_Qwen2-VL-Instruct.git

if OPTIONS['INSTALL_ComfyUI_MiniCPM_V_2_6']:
    ![ ! -d ComfyUI_MiniCPM-V-2_6-int4 ] && echo -= Initial setup ComfyUI_MiniCPM-V-2_6-int4 =- && git clone https://github.com/IuvenisSapiens/ComfyUI_MiniCPM-V-2_6-int4.git

# The dependency script below is addressed relative to the ComfyUI root
%cd $WORKSPACE

if OPTIONS['INSTALL_CUSTOM_NODES_DEPENDENCIES']:
    !echo "-= Install custom nodes dependencies =-"
    ![ -f "custom_nodes/ComfyUI-Manager/scripts/colab-dependencies.py" ] && python "custom_nodes/ComfyUI-Manager/scripts/colab-dependencies.py"
```
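After the setup cell finishes, a quick sanity check can confirm that the custom nodes landed under custom_nodes. This is a minimal sketch, assuming ComfyUI lives at /mnt/workspace/ComfyUI and that each repository was cloned under the default folder name git derives from its URL; the helper `missing_custom_nodes` is hypothetical.

```python
from pathlib import Path

# Folder names that `git clone` creates from the repository URLs in the setup cell
EXPECTED_NODES = (
    "ComfyUI-Manager",
    "ComfyUI_Qwen2-VL-Instruct",
    "ComfyUI_MiniCPM-V-2_6-int4",
)


def missing_custom_nodes(workspace: str, expected=EXPECTED_NODES) -> list:
    """Return the expected custom-node folders that are absent from <workspace>/custom_nodes."""
    nodes_dir = Path(workspace) / "custom_nodes"
    return [name for name in expected if not (nodes_dir / name).is_dir()]


if __name__ == "__main__":
    missing = missing_custom_nodes("/mnt/workspace/ComfyUI")
    print("all custom nodes present" if not missing else f"missing: {missing}")
```

If you only enabled one of the two VQA nodes, pass a shorter `expected` tuple.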

Download the vision-language models into their own subdirectories under models/prompt_generator/. Download only the models you plan to use.

```python
#@markdown ###Download standard resources
%cd /mnt/workspace/ComfyUI

### FLUX1-DEV (image generation model, not used by the captioning workflows; left commented out)
# !modelscope download --model=AI-ModelScope/FLUX.1-dev --local_dir ./models/unet/ flux1-dev.safetensors

# Vision-language models, each in its own folder under models/prompt_generator/
!modelscope download --model=qwen/Qwen2-VL-2B-Instruct --local_dir ./models/prompt_generator/Qwen2-VL-2B-Instruct/
!modelscope download --model=OpenBMB/MiniCPM-V-2_6-int4 --local_dir ./models/prompt_generator/MiniCPM-V-2_6-int4/
```
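If you re-run the notebook often, you may want to skip models that are already on disk. The sketch below wraps the same `modelscope download` command lines in plain Python; the model IDs and target folders are copied from the cell above, while the helper names and the "a config.json means the model is downloaded" heuristic are assumptions, not something the tutorial or ModelScope prescribes.

```python
import subprocess
from pathlib import Path

COMFY_ROOT = Path("/mnt/workspace/ComfyUI")

# ModelScope model IDs and target folders, copied from the download cell
MODELS = {
    "qwen/Qwen2-VL-2B-Instruct": "models/prompt_generator/Qwen2-VL-2B-Instruct",
    "OpenBMB/MiniCPM-V-2_6-int4": "models/prompt_generator/MiniCPM-V-2_6-int4",
}


def download_cmd(model_id: str, local_dir: Path) -> list:
    """Argument list equivalent to `modelscope download --model=<id> --local_dir <dir>`."""
    return ["modelscope", "download", f"--model={model_id}", "--local_dir", str(local_dir)]


def looks_downloaded(local_dir: Path) -> bool:
    """Heuristic (assumption): a complete checkpoint folder contains a config.json."""
    return (local_dir / "config.json").is_file()


def download_selected(model_ids, root: Path = COMFY_ROOT) -> None:
    """Download each selected model unless its folder already looks complete."""
    for model_id in model_ids:
        target = root / MODELS[model_id]
        if looks_downloaded(target):
            print(f"skip {model_id}: already in {target}")
            continue
        # check=True raises CalledProcessError if the CLI reports a failure
        subprocess.run(download_cmd(model_id, target), check=True)
```

For example, `download_selected(["qwen/Qwen2-VL-2B-Instruct"])` fetches only Qwen2-VL. Passing an argument list to `subprocess.run` avoids shell quoting issues with paths.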

Run ComfyUI and expose it through a cloudflared tunnel

```python
# Install cloudflared from the ModelScope-hosted .deb package
!wget "https://modelscope.oss-cn-beijing.aliyuncs.com/resource/cloudflared-linux-amd64.deb"
!dpkg -i cloudflared-linux-amd64.deb

import socket
import subprocess
import threading
import time


def iframe_thread(port):
    # Poll every 0.5 s until ComfyUI accepts TCP connections on the port
    while True:
        time.sleep(0.5)
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        result = sock.connect_ex(('127.0.0.1', port))
        sock.close()  # close the probe socket whether or not it connected
        if result == 0:
            break

    print("\nComfyUI finished loading, trying to launch cloudflared (if it gets stuck here cloudflared is having issues)\n")

    # Open a quick tunnel to the local server; the public URL is read from cloudflared's stderr
    p = subprocess.Popen(["cloudflared", "tunnel", "--url", "http://127.0.0.1:{}".format(port)],
                         stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    for line in p.stderr:
        l = line.decode()
        if "trycloudflare.com " in l:
            print("This is the URL to access ComfyUI:", l[l.find("http"):], end='')
        #print(l, end='')


# Start the watcher in the background, then launch ComfyUI on its default port 8188
threading.Thread(target=iframe_thread, daemon=True, args=(8188,)).start()

!python main.py --dont-print-server
```
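The `iframe_thread` function above polls forever and slices the URL out of the log line starting at the first `http`. A slightly more defensive variant could bound the wait and pull the URL out with a regular expression. This is a sketch under two assumptions: that the quick-tunnel URL has the form `https://<name>.trycloudflare.com`, and that it appears somewhere in a single stderr line, as the original loop already relies on. The helper names are hypothetical.

```python
import re
import socket
import time
from typing import Optional

# Assumed URL shape for cloudflared quick tunnels
TRYCLOUDFLARE_URL = re.compile(r"https://[\w.-]+\.trycloudflare\.com")


def wait_for_port(port: int, host: str = "127.0.0.1",
                  timeout: float = 600.0, interval: float = 0.5) -> bool:
    """Poll until host:port accepts TCP connections; return False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # create_connection raises OSError (e.g. connection refused) until the server is up
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)
    return False


def extract_tunnel_url(log_line: str) -> Optional[str]:
    """Return the first *.trycloudflare.com URL in a log line, or None if there is none."""
    match = TRYCLOUDFLARE_URL.search(log_line)
    return match.group(0) if match else None
```

Inside `iframe_thread`, the `while True` loop could become `if not wait_for_port(port): return`, and the print could use `extract_tunnel_url(l)` instead of `l[l.find("http"):]`, which drops the table borders cloudflared may draw around the URL.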

Import a workflow (download one of the JSON files below and load it in the ComfyUI web page):

Qwen2-VL:

https://github.com/IuvenisSapiens/ComfyUI_Qwen2-VL-Instruct/blob/main/examples/Chat_with_video_workflow.json

MiniCPM-V-2_6:

https://github.com/IuvenisSapiens/ComfyUI_MiniCPM-V-2_6-int4/blob/main/examples/Chat_with_video_workflow_polished.json
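For batch captioning, the same workflow can be queued over ComfyUI's local HTTP API instead of clicking through the UI. This is a minimal sketch, assuming you first re-export the loaded workflow in API format (the UI's "Save (API Format)" option) and that the server listens on the default http://127.0.0.1:8188. The `/prompt` endpoint and the `{"prompt": ...}` payload follow ComfyUI's bundled API example; the node ID and input name you override depend entirely on your exported file, so the ones in the usage note are hypothetical.

```python
import json
import urllib.request
import uuid

COMFY_URL = "http://127.0.0.1:8188"  # local ComfyUI started earlier (default port)


def build_prompt_request(api_workflow: dict, base_url: str = COMFY_URL,
                         client_id: str = "") -> urllib.request.Request:
    """Build the POST /prompt request that queues one API-format workflow."""
    payload = {"prompt": api_workflow, "client_id": client_id or str(uuid.uuid4())}
    return urllib.request.Request(
        f"{base_url}/prompt",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def with_input(api_workflow: dict, node_id: str, input_name: str, value) -> dict:
    """Return a copy of the workflow with one node input overridden, e.g. a video path."""
    patched = json.loads(json.dumps(api_workflow))  # deep copy of plain JSON data
    patched[node_id]["inputs"][input_name] = value
    return patched


def queue_prompt(api_workflow: dict, base_url: str = COMFY_URL) -> dict:
    """Send the request and return ComfyUI's JSON reply (it includes a prompt_id)."""
    with urllib.request.urlopen(build_prompt_request(api_workflow, base_url)) as resp:
        return json.loads(resp.read())
```

A batch run would load the exported file with `json.load`, then for each video call `queue_prompt(with_input(workflow, "<node id>", "<input name>", path))`, using the node ID and input name of the video loader in your own export. Copying the workflow per request keeps the loaded template unchanged between iterations.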

