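Recently, ByteDance's open-source Hyper-SD project added official support for FLUX.1-dev. It currently offers an 8-step LoRA and a 16-step LoRA. Compared with the 30 steps FLUX.1-dev uses by default, the 8-step LoRA makes generation nearly 4x faster. The Hyper-SD team has also announced that LoRAs for even fewer steps are coming soon. Stay tuned!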
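The "nearly 4x" figure is the ratio of step counts: 30 / 8 = 3.75. The sketch below computes that ratio. It is only an upper bound, assuming each denoising step costs the same. Fixed costs such as text encoding and VAE decoding do not shrink with the step count.

```python
BASE_STEPS = 30  # default FLUX.1-dev step count quoted in this article


def ideal_speedup(base_steps: int, lora_steps: int) -> float:
    """Upper bound on the speedup obtained by cutting denoising steps alone.

    Assumes every step costs the same and ignores fixed per-image costs
    (text encoding, VAE decoding), so real end-to-end gains are lower.
    """
    return base_steps / lora_steps


for steps in (16, 8):
    print(f"{steps}-step LoRA: up to {ideal_speedup(BASE_STEPS, steps):.2f}x faster")
```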

Technical Overview

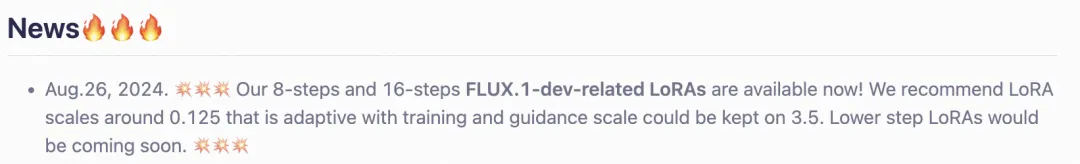

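Hyper-SD is a framework that addresses the computational overhead of multi-step inference in diffusion models (DMs). It combines the strengths of two approaches, ODE trajectory preservation and ODE trajectory reformulation, and keeps performance nearly lossless while compressing the number of steps. Specifically, it uses Trajectory Segmented Consistency Distillation (TSCD). This method performs consistency distillation progressively within predefined timestep segments, which preserves the original ODE trajectory.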
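The segmentation idea behind TSCD can be sketched as follows. The sketch assumes 1,000 discrete training timesteps split into equal segments. The helper names and the 8 → 4 → 2 → 1 schedule are illustrative assumptions, not Hyper-SD's training code. Within a segment, the distilled model maps a sample at timestep `t` to the lower edge of its own segment rather than straight to `t = 0`. Each stage then uses fewer, longer segments.

```python
def segment_boundaries(num_timesteps: int, num_segments: int) -> list[int]:
    """Split timesteps [0, num_timesteps) into equal segments and return the edges."""
    if num_timesteps % num_segments:
        raise ValueError("this sketch assumes segments of equal length")
    width = num_timesteps // num_segments
    return [i * width for i in range(num_segments + 1)]


def segment_target(t: int, num_timesteps: int, num_segments: int) -> int:
    """Timestep that a sample at t is mapped to: the lower edge of its own segment."""
    width = num_timesteps // num_segments
    return (t // width) * width


# Assumed progressive schedule: halve the number of segments at each stage.
# With a single segment, every sample is mapped all the way to t = 0.
for k in (8, 4, 2, 1):
    print(k, segment_boundaries(1000, k), segment_target(620, 1000, k))
```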

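It also uses human feedback learning to improve performance when only a few steps are used, and applies score distillation to strengthen low-step generation further. Experiments and user studies show that Hyper-SD achieves state-of-the-art results for low-step inference on models such as SDXL and SD1.5.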

ModelScope model page:

https://modelscope.cn/models/bytedance/hyper-sd

Hugging Face model page:

https://huggingface.co/ByteDance/Hyper-SD

Project page:

https://hyper-sd.github.io/

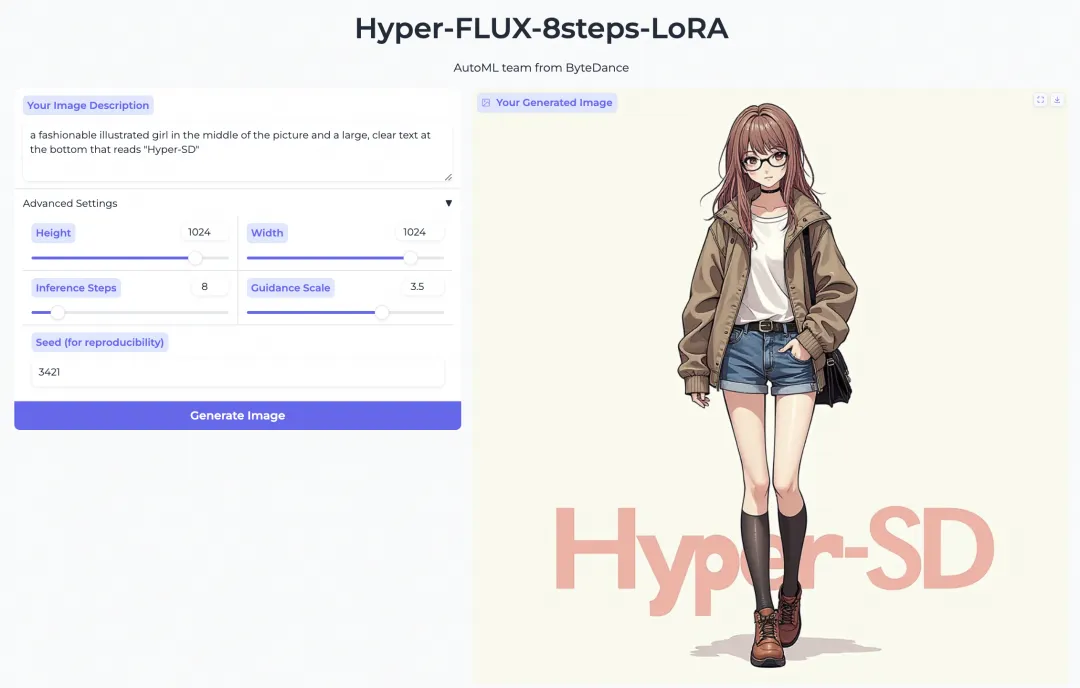

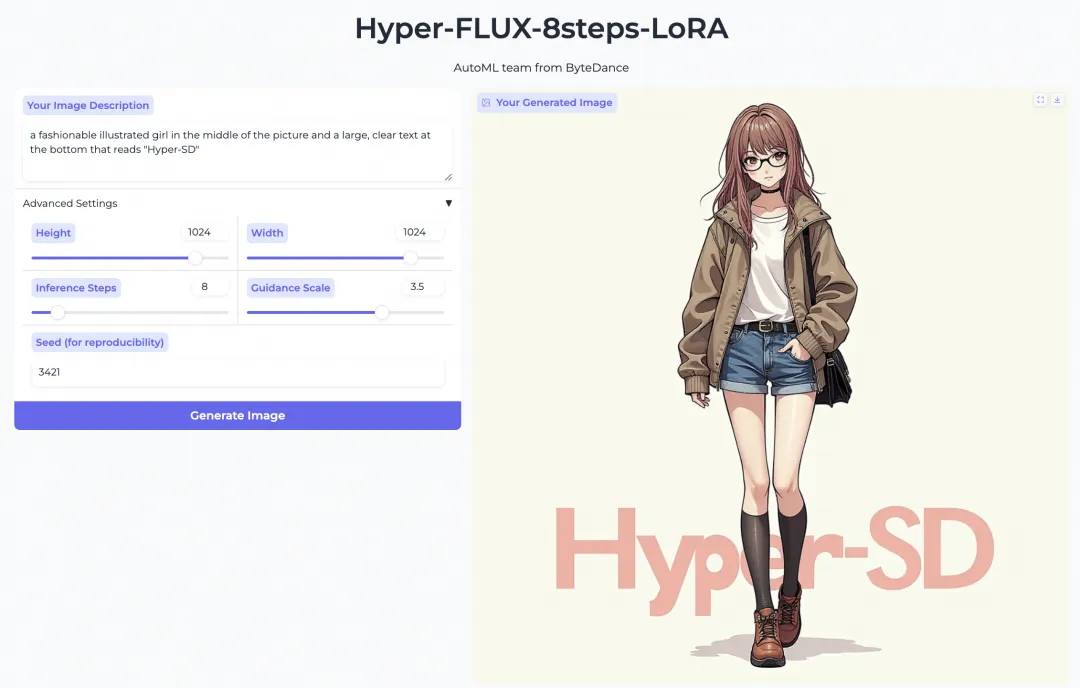

Try the Model

You can try the model through the links above or in the mini program.

Inference with Diffusers

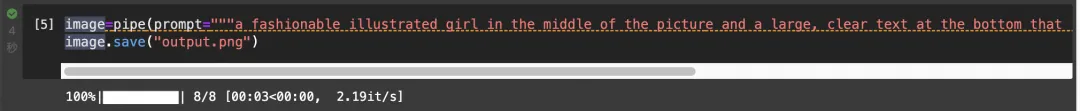

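Example Diffusers inference code (single A100 GPU):

```python
import torch
from diffusers import FluxPipeline
from modelscope import snapshot_download
from modelscope.hub.file_download import model_file_download

# Download the FLUX.1-dev base model and the 8-step Hyper-SD LoRA from ModelScope
base_model_id = snapshot_download("AI-ModelScope/FLUX.1-dev")
lora_path = model_file_download(model_id='ByteDance/Hyper-SD', file_path='Hyper-FLUX.1-dev-8steps-lora.safetensors')

# Load the base pipeline from the local ModelScope snapshot.
# (The FLUX.1-dev repo on Hugging Face is gated; loading it from there requires an access token.)
pipe = FluxPipeline.from_pretrained(base_model_id)

# Load the Hyper-SD LoRA and merge it into the base weights (scale 0.125 for the 8-step LoRA)
pipe.load_lora_weights(lora_path)
pipe.fuse_lora(lora_scale=0.125)

# Move the pipeline to the GPU in half precision
pipe.to("cuda", dtype=torch.float16)

# 8 denoising steps instead of the default ~30
image = pipe(prompt="a photo of a cat, hold a sign 'I love Qwen'", num_inference_steps=8, guidance_scale=3.5).images[0]
image.save("output.png")
```

Generation time (3 seconds):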

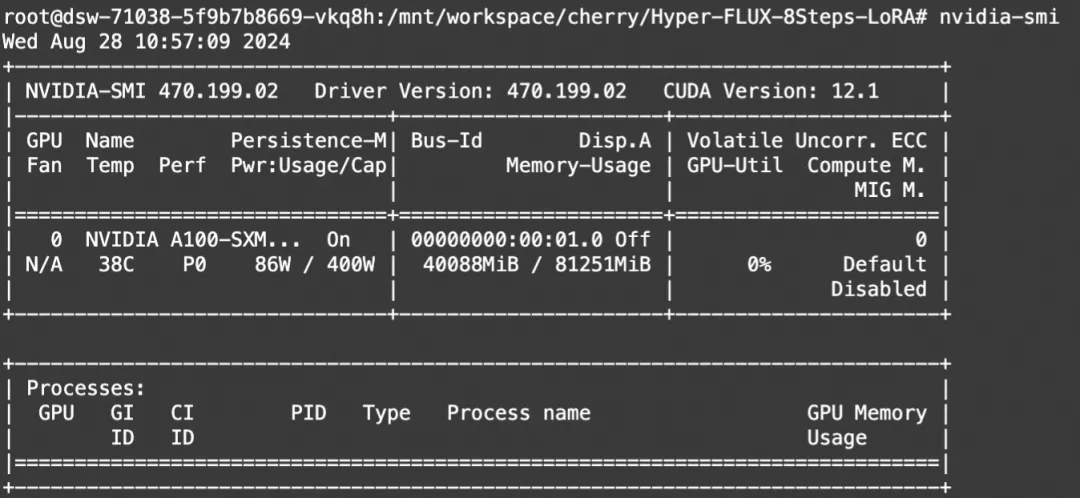

GPU memory usage (40 GB):

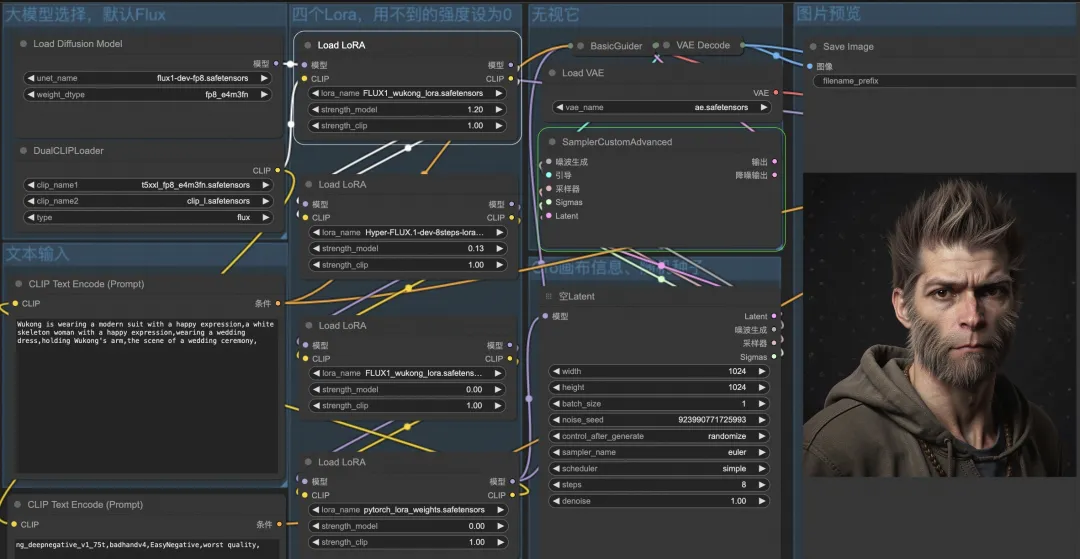
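`fuse_lora(lora_scale=0.125)` merges the LoRA update into the base weights. After fusing, each forward pass costs the same as the base model. The NumPy sketch below illustrates that arithmetic. It assumes the standard LoRA form W' = W + s·BA, with any alpha/rank factor folded into the scale `s`. It is not diffusers' internal implementation. Summing several scaled updates is, conceptually, what stacking several LoRAs (as in the multi-LoRA workflow later) amounts to. The "style" adapter here is a hypothetical stand-in.

```python
import numpy as np


def fuse_loras(W: np.ndarray, loras) -> np.ndarray:
    """Return W + sum(scale * B @ A) over (A, B, scale) tuples.

    A has shape (r, in_features) and B has shape (out_features, r), so each
    B @ A is a low-rank update with the same shape as W.
    """
    fused = W.copy()
    for A, B, scale in loras:
        fused += scale * (B @ A)
    return fused


rng = np.random.default_rng(0)
out_f, in_f, r = 6, 4, 2
W = rng.standard_normal((out_f, in_f))
hyper = (rng.standard_normal((r, in_f)), rng.standard_normal((out_f, r)), 0.125)  # e.g. Hyper-SD
style = (rng.standard_normal((r, in_f)), rng.standard_normal((out_f, r)), 1.0)    # hypothetical style LoRA

W_fused = fuse_loras(W, [hyper, style])
x = rng.standard_normal(in_f)

# One matmul with the fused weights equals the base output plus each scaled LoRA branch
unfused = W @ x + 0.125 * (hyper[1] @ (hyper[0] @ x)) + 1.0 * (style[1] @ (style[0] @ x))
print(np.allclose(W_fused @ x, unfused))  # True
```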

Trying It in a ComfyUI Workflow

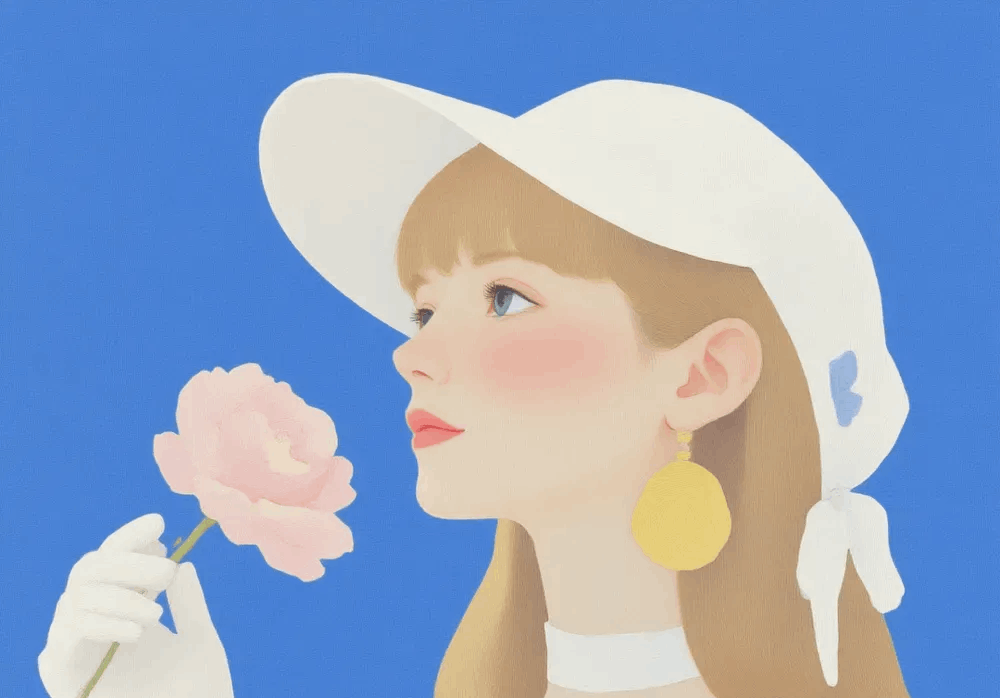

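Inference with diffusers uses a lot of GPU memory. Below, we show how to run ComfyUI on ModelScope's free compute (22 GB of GPU memory) with the FLUX fp8 model plus Hyper-SD, generating images in seconds and blending multiple LoRAs.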
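A rough weights-only estimate shows why the fp8 checkpoint matters on a 22 GB GPU. This sketch counts only the 12B-parameter FLUX.1-dev transformer. The text encoders, VAE, activations and CUDA context all come on top, so the result is a lower bound, not a prediction of the measured figures in this article.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}

FLUX_TRANSFORMER_PARAMS = 12e9  # FLUX.1-dev is a 12B-parameter transformer


def weight_gb(num_params: float, dtype: str) -> float:
    """Storage for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("fp16", "fp8"):
    print(f"{dtype}: {weight_gb(FLUX_TRANSFORMER_PARAMS, dtype):.1f} GB of transformer weights")
```

Halving the bytes per parameter halves the transformer's footprint. That headroom is what leaves space for the text encoders and LoRAs on the smaller card.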

Environment setup and installation:

- Python 3.10 or later
- PyTorch 2.3 or later (recommended)
- CUDA 12.1 or later (recommended)
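To check these requirements before installing anything, a small script like the following can help. It is a sketch: the version parsing is a simplified assumption that reads only the leading numeric parts. Also, `torch.version.cuda` reports the CUDA version PyTorch was built against, not the installed driver.

```python
import sys


def parse_version(version: str) -> tuple[int, ...]:
    """Turn "2.3.1+cu121" into (2, 3, 1); stops at the first non-numeric part."""
    core = version.split("+")[0]  # drop local build tags such as "+cu121"
    numbers = []
    for part in core.split("."):
        digits = ""
        for ch in part:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        numbers.append(int(digits))
    return tuple(numbers)


def meets(version: str, minimum: tuple[int, ...]) -> bool:
    """True if version is at least minimum, comparing only len(minimum) components."""
    return parse_version(version)[: len(minimum)] >= minimum


def report() -> None:
    py = f"{sys.version_info.major}.{sys.version_info.minor}"
    print(f"Python {py}:", "OK" if meets(py, (3, 10)) else "need >= 3.10")
    try:
        import torch
    except ImportError:
        print("PyTorch: not installed")
        return
    print(f"PyTorch {torch.__version__}:", "OK" if meets(torch.__version__, (2, 3)) else "2.3+ recommended")
    cuda = torch.version.cuda  # None for CPU-only builds
    if cuda is None:
        print("CUDA: not available in this PyTorch build")
    else:
        print(f"CUDA {cuda}:", "OK" if meets(cuda, (12, 1)) else "12.1+ recommended")


if __name__ == "__main__":
    report()
```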

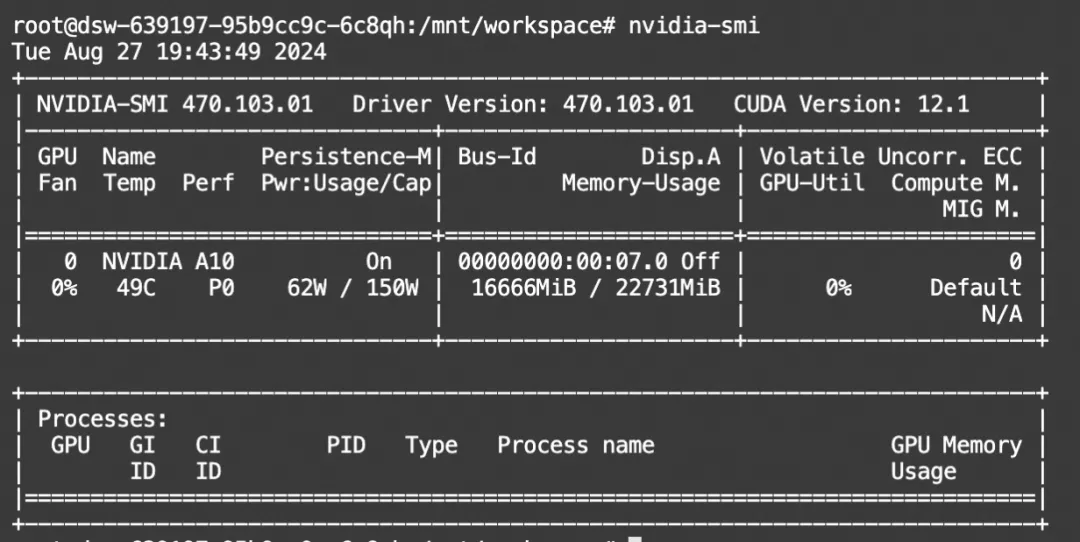

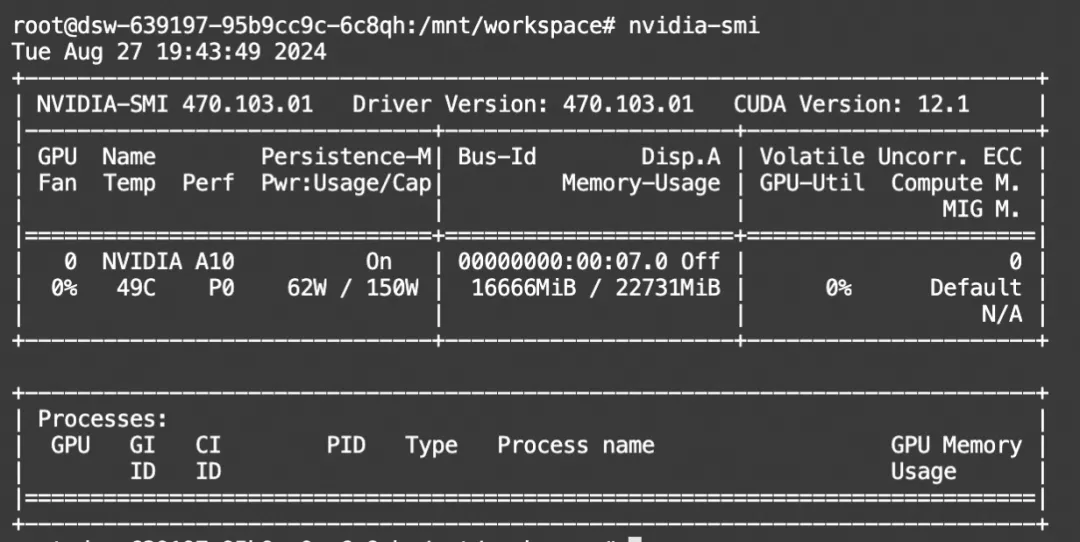

This article uses the free GPU compute provided by the ModelScope community:

Download and deploy ComfyUI

Clone the code and install the dependencies. The repositories are:

- https://github.com/comfyanonymous/ComfyUI
- https://github.com/ltdrdata/ComfyUI-Manager
- https://github.com/Suzie1/ComfyUI_Comfyroll_CustomNodes.git
- https://github.com/XLabs-AI/x-flux-comfyui.git

```python
#@title Environment Setup
OPTIONS = {}

UPDATE_COMFY_UI = True  #@param {type:"boolean"}
INSTALL_COMFYUI_MANAGER = True  #@param {type:"boolean"}
INSTALL_CUSTOM_NODES_DEPENDENCIES = True  #@param {type:"boolean"}
INSTALL_ComfyUI_Comfyroll_CustomNodes = True  #@param {type:"boolean"}
INSTALL_x_flux_comfyui = True  #@param {type:"boolean"}

OPTIONS['UPDATE_COMFY_UI'] = UPDATE_COMFY_UI
OPTIONS['INSTALL_COMFYUI_MANAGER'] = INSTALL_COMFYUI_MANAGER
OPTIONS['INSTALL_CUSTOM_NODES_DEPENDENCIES'] = INSTALL_CUSTOM_NODES_DEPENDENCIES
OPTIONS['INSTALL_ComfyUI_Comfyroll_CustomNodes'] = INSTALL_ComfyUI_Comfyroll_CustomNodes
OPTIONS['INSTALL_x_flux_comfyui'] = INSTALL_x_flux_comfyui

# Work under /mnt/workspace so that ComfyUI ends up in /mnt/workspace/ComfyUI
%cd /mnt/workspace/
current_dir = !pwd
WORKSPACE = f"{current_dir[0]}/ComfyUI"

# Clone ComfyUI on the first run only
![ ! -d $WORKSPACE ] && echo -= Initial setup ComfyUI =- && git clone https://github.com/comfyanonymous/ComfyUI
%cd $WORKSPACE

if OPTIONS['UPDATE_COMFY_UI']:
    !echo "-= Updating ComfyUI =-"
    !git pull

# All custom node repositories live in ComfyUI/custom_nodes
%cd $WORKSPACE/custom_nodes

if OPTIONS['INSTALL_COMFYUI_MANAGER']:
    ![ ! -d ComfyUI-Manager ] && echo -= Initial setup ComfyUI-Manager =- && git clone https://github.com/ltdrdata/ComfyUI-Manager
    !cd ComfyUI-Manager && git pull

if OPTIONS['INSTALL_ComfyUI_Comfyroll_CustomNodes']:
    ![ ! -d ComfyUI_Comfyroll_CustomNodes ] && echo -= Initial setup ComfyUI_Comfyroll_CustomNodes =- && git clone https://github.com/Suzie1/ComfyUI_Comfyroll_CustomNodes.git

if OPTIONS['INSTALL_x_flux_comfyui']:
    ![ ! -d x-flux-comfyui ] && echo -= Initial setup x-flux-comfyui =- && git clone https://github.com/XLabs-AI/x-flux-comfyui.git

# Return to the ComfyUI root so the relative paths below resolve
%cd $WORKSPACE

if OPTIONS['INSTALL_CUSTOM_NODES_DEPENDENCIES']:
    !echo "-= Install custom nodes dependencies =-"
    ![ -f "custom_nodes/ComfyUI-Manager/scripts/colab-dependencies.py" ] && python "custom_nodes/ComfyUI-Manager/scripts/colab-dependencies.py"

!pip install spandrel
```

Next, download the models and place them in the matching subdirectories of the `models` directory. These include the FLUX.1 fp8 base model, the text encoders, the VAE, and LoRAs such as Hyper-FLUX.1-dev-8steps-lora. Feel free to download only the models you want to use.

```python
#@markdown ### Download standard resources
%cd /mnt/workspace/ComfyUI

# FLUX.1-dev base model: the original checkpoint is commented out,
# and the fp8 version is used instead to fit into 22 GB of GPU memory
# !modelscope download --model=AI-ModelScope/FLUX.1-dev --local_dir ./models/unet/ flux1-dev.safetensors
!modelscope download --model=AI-ModelScope/flux-fp8 --local_dir ./models/unet/ flux1-dev-fp8.safetensors

# Text encoders (CLIP-L and fp8 T5-XXL)
!modelscope download --model=AI-ModelScope/flux_text_encoders --local_dir ./models/clip/ clip_l.safetensors
!modelscope download --model=AI-ModelScope/flux_text_encoders --local_dir ./models/clip/ t5xxl_fp8_e4m3fn.safetensors

# VAE
!modelscope download --model=AI-ModelScope/FLUX.1-dev --local_dir ./models/vae/ ae.safetensors

# LoRAs: optional style LoRAs plus the 8-step Hyper-SD LoRA
# !modelscope download --model=FluxLora/flux-koda --local_dir ./models/loras/ araminta_k_flux_koda.safetensors
!modelscope download --model=FluxLora/Black-Myth-Wukong-FLUX-LoRA --local_dir ./models/loras/ pytorch_lora_weights.safetensors
!modelscope download --model=FluxLora/FLUX1_wukong_lora --local_dir ./models/loras/ FLUX1_wukong_lora.safetensors
!modelscope download --model=ByteDance/Hyper-SD --local_dir ./models/loras/ Hyper-FLUX.1-dev-8steps-lora.safetensors
```

Run ComfyUI via cloudflared

```python
!wget "https://modelscope.oss-cn-beijing.aliyuncs.com/resource/cloudflared-linux-amd64.deb"
!dpkg -i cloudflared-linux-amd64.deb

%cd /mnt/workspace/ComfyUI

import socket
import subprocess
import threading
import time


def iframe_thread(port):
    # Poll until ComfyUI is listening on the given local port
    while True:
        time.sleep(0.5)
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        result = sock.connect_ex(('127.0.0.1', port))
        sock.close()
        if result == 0:
            break

    print("\nComfyUI finished loading, trying to launch cloudflared (if it gets stuck here cloudflared is having issues)\n")

    # Open a temporary Cloudflare tunnel to the local ComfyUI server
    p = subprocess.Popen(["cloudflared", "tunnel", "--url", "http://127.0.0.1:{}".format(port)],
                         stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    # cloudflared logs the public *.trycloudflare.com URL on stderr
    for line in p.stderr:
        l = line.decode()
        if "trycloudflare.com " in l:
            print("This is the URL to access ComfyUI:", l[l.find("http"):], end='')


# Watch for the server in the background, then start ComfyUI on its default port 8188
threading.Thread(target=iframe_thread, daemon=True, args=(8188,)).start()

!python main.py --dont-print-server
```

ComfyUI workflow links

Hyper-SD single-LoRA accelerated generation:

https://modelscope.oss-cn-beijing.aliyuncs.com/resource/workflow-flux-hypersd-816-steps.json

Hyper-SD multi-LoRA blending with accelerated generation:

https://modelscope.oss-cn-beijing.aliyuncs.com/resource/FLUX.1-multilora.json

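Thanks to the community developers who shared these workflows:

Hyper-SD single-LoRA accelerated generation:

https://openart.ai/workflows/bulldog_fruitful_46/flux-hypersd-816-steps

Hyper-SD multi-LoRA blending with accelerated generation: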

https://www.liblib.art/modelinfo/ff3f9fe8c41e4c8a9780c728a85f1643

单lora流程图如下:

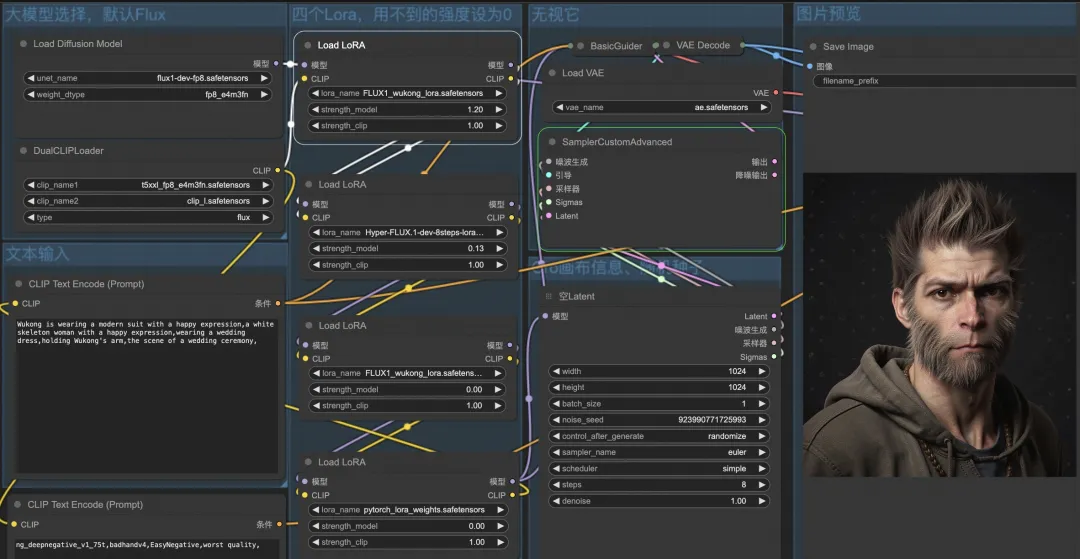

多lora流程图如下:

显存占用(17G):

注意,上传图片后,需要检查图片中的模型文件和下载存储的模型名称是否一致,可直接一键点击如ckpt_name关联到存储的模型文件名字。
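To check the names before queuing a run, a small script can list the model files a workflow references and flag any that are missing from ComfyUI's `models` directory. This sketch rests on several assumptions:

- It expects the UI-format workflow JSON, with a top-level `nodes` list whose entries carry `widgets_values`.
- It treats any string ending in a common weight-file extension as a model reference.
- It matches on file name only.

The helper names are hypothetical.

```python
import json
from pathlib import Path

# Assumed set of weight-file extensions; extend as needed
MODEL_SUFFIXES = (".safetensors", ".ckpt", ".pt", ".pth", ".sft", ".bin")


def referenced_model_files(workflow: dict) -> set[str]:
    """Collect widget values that look like model file names."""
    names = set()
    for node in workflow.get("nodes", []):
        values = node.get("widgets_values") or []
        if isinstance(values, dict):  # some custom nodes store widgets as a dict
            values = list(values.values())
        for value in values:
            if isinstance(value, str) and value.lower().endswith(MODEL_SUFFIXES):
                names.add(value)
    return names


def missing_model_files(workflow_path, models_dir) -> list[str]:
    """Referenced model files whose names do not appear anywhere under models_dir."""
    workflow = json.loads(Path(workflow_path).read_text(encoding="utf-8"))
    available = {p.name for p in Path(models_dir).rglob("*") if p.is_file()}
    return sorted(n for n in referenced_model_files(workflow) if Path(n).name not in available)
```

For example, `missing_model_files("workflow-flux-hypersd-816-steps.json", "/mnt/workspace/ComfyUI/models")` would return the names to fix. An empty list means every referenced file was found.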

Click the link below to go to the model page:

https://modelscope.cn/models/bytedance/hyper-sd
